The 2020s could see a rapid expansion in dark energy research.

For starters, two powerful new instruments will scan the night sky for distant galaxies. The Dark Energy Spectroscopic Instrument, or DESI, will measure the distances to about 35 million cosmic objects, and the Large Synoptic Survey Telescope, or LSST, will capture high-resolution videos of nearly 40 billion galaxies.

Both projects will probe how dark energy—the phenomenon that scientists think is causing the universe to expand at an accelerating rate—has shaped the structure of the universe over time.

But scientists use more than telescopes to search for clues about the nature of dark energy. Increasingly, dark energy research is taking place not only at mountaintop observatories with panoramic views but also in the chilly, humming rooms that house state-of-the-art supercomputers.

The central question in dark energy research is whether dark energy exists as a cosmological constant (a repulsive force that counteracts gravity, as Albert Einstein suggested a century ago) or whether factors that scientists can’t see are influencing the acceleration rate. Alternatively, Einstein’s theory of gravity could be wrong.

“When we analyze observations of the universe, we don’t know what the underlying model is because we don’t know the fundamental nature of dark energy,” says Katrin Heitmann, a senior physicist at Argonne National Laboratory. “But with computer simulations, we know what model we’re putting in, so we can investigate the effects it would have on the observational data.”

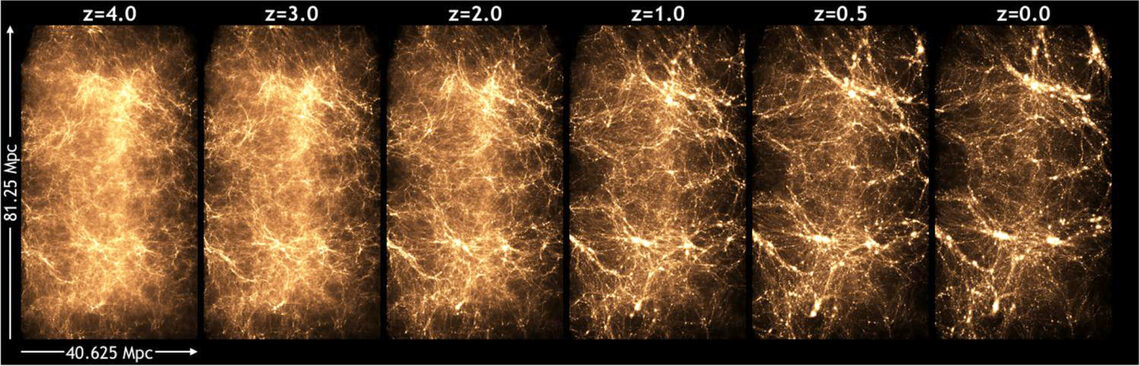

A simulation shows how matter is distributed in the universe over time.

Growing a universe

Heitmann and her Argonne colleagues use their cosmology code, called HACC, on supercomputers to simulate the structure and evolution of the universe. The supercomputers needed for these simulations are built from hundreds of thousands of connected processors and typically crunch well over a quadrillion calculations per second.

The Argonne team recently finished a high-resolution simulation of the universe expanding and changing over 13 billion years, most of its lifetime. Now the data from their simulations is being used to develop processing and analysis tools for the LSST, and packets of data are being released to the research community so cosmologists without access to a supercomputer can make use of the results for a wide range of studies.

Risa Wechsler, a scientist at SLAC National Accelerator Laboratory and a professor at Stanford University, is the co-spokesperson of the DESI experiment. Wechsler is producing simulations that are being used to interpret measurements from the ongoing Dark Energy Survey, as well as to develop analysis tools for future experiments like DESI and LSST.

“By testing our current predictions against existing data from the Dark Energy Survey, we are learning where the models need to be improved for the future,” Wechsler says. “Simulations are our key predictive tool. In cosmological simulations, we start out with an early universe that has tiny fluctuations, or changes in density, and gravity allows those fluctuations to grow over time. The growth of structure becomes more and more complicated and is impossible to calculate with pen and paper. You need supercomputers.”
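The early, gentle phase of that growth can still be sketched in a few lines. The following is a minimal illustration, not the method used by HACC or by any survey team: it integrates the standard linear growth equation for a flat universe containing only matter and a cosmological constant, ignoring radiation. The function name `linear_growth` and the density values are assumptions chosen for the example. The later, tangled stage of growth that Wechsler describes is the part that needs supercomputers.

```python
def linear_growth(omega_m, a_start=0.01, a_end=1.0, steps=2000):
    """Integrate the linear growth factor D(a) with a fixed-step RK4 scheme.

    Assumes a flat universe with matter fraction omega_m and a cosmological
    constant 1 - omega_m; radiation is ignored. The scale factor a runs
    from a_start (early universe) to a_end (1.0 means today).
    """
    omega_l = 1.0 - omega_m

    def e2(a):
        # Squared expansion rate in units of today's value, (H/H0)^2.
        return omega_m * a**-3 + omega_l

    def rhs(a, d, v):
        # D'' = -(3/a + dlnE/da) * D' + 1.5 * omega_m * D / (a^5 * E^2)
        dlne_da = -1.5 * omega_m * a**-4 / e2(a)
        accel = -(3.0 / a + dlne_da) * v + 1.5 * omega_m * d / (a**5 * e2(a))
        return v, accel

    h = (a_end - a_start) / steps
    a, d, v = a_start, a_start, 1.0  # matter-era start: D = a, dD/da = 1
    for _ in range(steps):
        k1d, k1v = rhs(a, d, v)
        k2d, k2v = rhs(a + h / 2, d + h / 2 * k1d, v + h / 2 * k1v)
        k3d, k3v = rhs(a + h / 2, d + h / 2 * k2d, v + h / 2 * k2v)
        k4d, k4v = rhs(a + h, d + h * k3d, v + h * k3v)
        d += h / 6 * (k1d + 2 * k2d + 2 * k3d + k4d)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        a += h
    return d

print("matter only:            ", linear_growth(1.0))
print("with cosmological const:", linear_growth(0.3))
```

In this toy model, fluctuations grow less by today when a cosmological constant is present than in a matter-only universe, which is one way dark energy leaves a mark on cosmic structure.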

Supercomputers have become extremely valuable for studying dark energy because—unlike dark matter, which scientists might be able to create in particle accelerators—dark energy can only be observed at the galactic scale.

“With dark energy, we can only see its effect between galaxies,” says Peter Nugent, division deputy for scientific engagement at the Computational Cosmology Center at Lawrence Berkeley National Laboratory.

Trial and error bars

“There are two kinds of errors in cosmology,” Heitmann says. “Statistical errors, meaning we cannot collect enough data, and systematic errors, meaning that there is something in the data that we don’t understand.”

Computer modeling can help reduce both.

DESI will collect about 10 times more data than its predecessor, the Baryon Oscillation Spectroscopic Survey, and LSST will generate 30 laptops’ worth of data each night. But even these enormous data sets do not fully eliminate statistical error. Simulation can support observational evidence by modeling similar conditions to see if the same results appear consistently.

“We’re basically creating the same size data set as the entire observational set, then we’re creating it again and again—producing up to 10 to 100 times more data than the observational sets,” Nugent says.
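The idea of regenerating the data set again and again can be illustrated with a toy example. This sketch assumes a made-up measurement (the average of many noisy values) and hypothetical names such as `mock_survey`; it is not any collaboration's pipeline. It shows only the statistical principle: the scatter across many simulated realizations estimates the error bar on a single measurement.

```python
import random
import statistics

def mock_survey(rng, n_objects, true_value=1.0, noise=0.5):
    """Return the mean of n_objects noisy measurements of true_value."""
    samples = [rng.gauss(true_value, noise) for _ in range(n_objects)]
    return statistics.fmean(samples)

def error_bar(n_objects, n_realizations=200, seed=42):
    """Estimate the statistical error as the scatter across mock realizations."""
    rng = random.Random(seed)
    results = [mock_survey(rng, n_objects) for _ in range(n_realizations)]
    return statistics.stdev(results)

small = error_bar(n_objects=100)
large = error_bar(n_objects=1000)  # a mock survey with 10 times more objects
print(f"error bar with 100 objects:  {small:.4f}")
print(f"error bar with 1000 objects: {large:.4f}")
```

With 10 times more objects per mock survey, the estimated error bar shrinks by roughly the square root of 10, which is why larger data sets reduce statistical error without eliminating it.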

Processing such large amounts of data requires sophisticated analyses. Simulations make this possible.

To program the tools that will compare observational and simulated data, researchers first have to model what the sky will look like through the lens of the telescope. In the case of LSST, this is done before the telescope is even built.

After populating a simulated universe with galaxies that are similar in distribution and brightness to real galaxies, scientists modify the results to account for the telescope’s optics, Earth’s atmosphere, and other limiting factors. By simulating the end product, they can efficiently process and analyze the observational data.
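As a rough illustration of this step, the sketch below takes a handful of simulated galaxy brightnesses and degrades them the way an instrument might. Every name and number here (`observe`, the throughput, the noise level, the detection threshold) is a hypothetical stand-in, not an LSST specification or part of any survey's software.

```python
import random

def observe(true_fluxes, throughput=0.6, sky_noise=2.0, threshold=5.0, seed=0):
    """Turn simulated galaxy fluxes into a mock list of detected fluxes.

    throughput: fraction of light surviving the atmosphere and optics (assumed)
    sky_noise:  standard deviation of random noise added per galaxy (assumed)
    threshold:  minimum measured flux that counts as a detection (assumed)
    """
    rng = random.Random(seed)
    detected = []
    for flux in true_fluxes:
        # Dim the galaxy, then add random measurement noise.
        measured = flux * throughput + rng.gauss(0.0, sky_noise)
        # Keep only galaxies bright enough to be picked out.
        if measured >= threshold:
            detected.append(measured)
    return detected

simulated_galaxies = [1.0, 4.0, 12.0, 30.0, 75.0, 200.0]  # arbitrary flux units
mock_catalog = observe(simulated_galaxies)
print(f"{len(mock_catalog)} of {len(simulated_galaxies)} galaxies detected")
```

Comparing the mock catalog with the input list shows which simulated galaxies the instrument would miss, which is the kind of effect an analysis tool has to correct for.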

Simulations are also an ideal way to tackle many sources of systematic error in dark energy research. By all appearances, dark energy acts as a repulsive force. But if new data or observations reveal properties of dark energy that are inconsistent with that picture, scientists will need different theories and a way of validating them.

“If you want to look at theories beyond the cosmological constant, you can make predictions through simulation,” Heitmann says.
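One simple family of alternatives lets the density of dark energy change as the universe expands. It is described by an equation-of-state parameter w, where w = -1 recovers the cosmological constant. The sketch below is an illustration under stated assumptions, not Heitmann's code: it uses the standard expansion-rate formula for a flat universe with constant w, and the function name `expansion_rate`, the matter fraction of 0.3 and the alternative value w = -0.9 are chosen only for the example.

```python
import math

def expansion_rate(z, w=-1.0, omega_m=0.3):
    """Return H(z)/H0 for a flat universe with a constant dark energy parameter w.

    z is redshift (0 means today); omega_m is the assumed matter fraction.
    """
    matter = omega_m * (1.0 + z) ** 3
    dark_energy = (1.0 - omega_m) * (1.0 + z) ** (3.0 * (1.0 + w))
    return math.sqrt(matter + dark_energy)

for z in (0.0, 0.5, 1.0, 2.0):
    constant = expansion_rate(z)             # cosmological constant, w = -1
    alternative = expansion_rate(z, w=-0.9)  # hypothetical alternative model
    print(f"z={z:.1f}  w=-1: {constant:.4f}  w=-0.9: {alternative:.4f}")
```

The two models differ only slightly in this toy comparison. A full simulation would carry such a small difference through to the quantities a survey measures, such as the distribution of galaxies.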

A conventional way to test new scientific theories is to introduce change into a system and compare it to a control. But in the case of cosmology, we are stuck in our universe, and the only way scientists may be able to uncover the nature of dark energy—at least in the foreseeable future—is by unleashing alternative theories in a virtual universe.